Data Lifecycle Management with Azure Storage

Introduction

Azure Storage is the foundation for many of the services on the Azure cloud platform. In this post I’ll walk you through the data lifecycle management features of Azure Storage accounts.

Components of Data Lifecycle Management

In a nutshell, data lifecycle management (DLM) is a policy-driven approach that can be automated to take data through its useful life. But what exactly is the life of data? Imagine that data is captured and stored in an Azure storage account. From there, it is either accessed frequently for further processing, reporting, analytics, or some other use, or it sits there for a long time and eventually becomes obsolete. Logic and validations may be applied to the data along either path. At some point, though, it reaches the end of its useful life and is archived, purged, or both.

This is where automated data lifecycle management in Azure helps customers optimize the size and cost of their storage accounts. Azure Blob Storage lifecycle management (currently in public preview) offers a rich, rule-based policy that you can use on GPv2 and Blob storage accounts to transition your data to the appropriate access tier or to expire it at the end of its lifecycle. A lifecycle management policy helps you:

- Transition blobs to a cooler storage tier (Hot to Cool, Hot to Archive, or Cool to Archive) to optimize for performance and cost

- Delete blobs at the end of their lifecycles

- Define rules to be executed once per day at the storage account level

- Apply rules to containers or a subset of blobs (using prefixes as filters)
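The rules listed above are expressed as a JSON policy document. As a minimal sketch, the Python below builds one rule in the schema documented for Blob Storage lifecycle management. The rule name `age-out-telemetry`, the `telemetry/raw` prefix and the day thresholds are hypothetical. It also defines a small local function, `evaluate` (also hypothetical), that approximates how the daily run would treat a single block blob. This is an illustration under stated assumptions, not the service's implementation. It only checks the prefix filter and the `daysAfterModificationGreaterThan` conditions. When several conditions are met, it picks the least expensive action (delete, then tierToArchive, then tierToCool), which is how the service is documented to resolve overlapping actions. The schema follows the current documentation. The preview release described in this post may have expected slightly different fields, such as a top-level version property.

```python
import json

# One lifecycle rule in the documented policy schema.
# Rule name, prefix and day thresholds are hypothetical examples.
POLICY = {
    "rules": [
        {
            "enabled": True,
            "name": "age-out-telemetry",
            "type": "Lifecycle",
            "definition": {
                "filters": {
                    "blobTypes": ["blockBlob"],
                    # A prefix starts with the container name ("telemetry")
                    "prefixMatch": ["telemetry/raw"],
                },
                "actions": {
                    "baseBlob": {
                        "tierToCool": {"daysAfterModificationGreaterThan": 30},
                        "tierToArchive": {"daysAfterModificationGreaterThan": 180},
                        "delete": {"daysAfterModificationGreaterThan": 2555},
                    }
                },
            },
        }
    ]
}

# When several actions qualify, the least expensive one wins:
# delete is cheaper than tierToArchive, which is cheaper than tierToCool.
ACTION_PRIORITY = ["delete", "tierToArchive", "tierToCool"]


def evaluate(rule, blob_name, days_since_modification):
    """Hypothetical local simulation (not the Azure service): return the
    base-blob action one rule would trigger for a block blob, or None."""
    if not rule.get("enabled", True):
        return None
    definition = rule["definition"]
    prefixes = definition.get("filters", {}).get("prefixMatch", [])
    # Without prefixMatch, a rule applies to every blob in the account
    if prefixes and not any(blob_name.startswith(p) for p in prefixes):
        return None
    base_blob_actions = definition["actions"].get("baseBlob", {})
    for action in ACTION_PRIORITY:
        condition = base_blob_actions.get(action)
        if condition and days_since_modification > condition["daysAfterModificationGreaterThan"]:
            return action
    return None


if __name__ == "__main__":
    rule = POLICY["rules"][0]
    for name, age in [("telemetry/raw/a.json", 10), ("telemetry/raw/b.json", 45),
                      ("telemetry/raw/c.json", 400), ("reports/q1.csv", 400)]:
        print(name, age, evaluate(rule, name, age))
    # Print the policy document so it can be saved as policy.json
    print(json.dumps(POLICY, indent=2))
```

With these thresholds, a 45-day-old blob under `telemetry/raw` would move to Cool, a 400-day-old one would move to Archive, and `reports/q1.csv` would be left alone because it does not match the prefix. To apply the policy for real, you could save the printed JSON as `policy.json` and run `az storage account management-policy create --account-name <storage-account> --resource-group <resource-group> --policy @policy.json`.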

Azure Storage Tiers

But first, a word about the different tiers within Azure Storage. To use tiering, you need a General Purpose v2 (GPv2) or Blob storage account. If you have a GPv1 account, you can easily convert it to GPv2 with either of the following methods:

Azure CLI

az storage account update -n miruaccfoeus2 -g rg-stafailovertests-us --set kind=StorageV2 --access-tier Cool

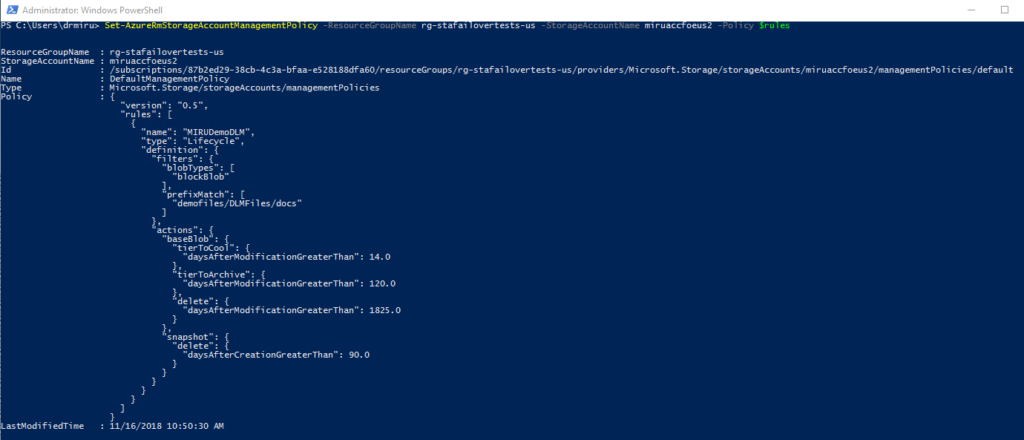

PowerShell

Set-AzureRmStorageAccount -ResourceGroupName <resource-group> -AccountName <storage-account> -UpgradeToStorageV2

Here’s a short overview of the different tiers.

| Tier | Tier Level | Intended Use | Use Cases | Access Time |
|---|---|---|---|---|
| Premium Storage (Preview) | Storage Account | Frequently accessed data that requires low access latency | Online transactions, video rendering, static web content | Microseconds to milliseconds |
| Hot Storage | Storage Account, Blob | Frequently accessed data (default) | Standard data processing | Milliseconds |
| Cool Storage | Storage Account, Blob | Infrequently accessed data, stored for at least 30 days | Media archive, short-term backups, disaster recovery (DR), raw telemetry data | Milliseconds |
| Archive Storage | Blob | Rarely or never accessed data, stored for at least 180 days | Archival sets, long-term backups, compliance archive sets | Up to 15 hours (requires rehydration) |
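The minimum storage durations in the tier table matter for lifecycle rules. If a blob is deleted or moved out of the Cool or Archive tier before the minimum is reached, Azure bills an early deletion charge equal to the remaining days at that tier's storage rate. For example, a blob deleted after 45 days in Archive is billed for another 135 days. A minimal sketch of that arithmetic follows. The helper `early_deletion_days` is hypothetical, the minimums are copied from the table, and only the Hot, Cool and Archive tiers are covered. No prices are included, since those vary by region and redundancy option.

```python
# Minimum storage duration per tier, in days, from the tier table.
# Hot has no minimum; Premium is not covered by this sketch.
MINIMUM_DAYS = {"hot": 0, "cool": 30, "archive": 180}


def early_deletion_days(tier: str, days_in_tier: int) -> int:
    """Hypothetical helper: number of extra days still billed if a blob
    leaves `tier` after `days_in_tier` days (0 once the minimum is met)."""
    minimum = MINIMUM_DAYS[tier.lower()]
    return max(0, minimum - days_in_tier)


if __name__ == "__main__":
    print(early_deletion_days("Archive", 45))  # 135
    print(early_deletion_days("Cool", 21))     # 9
    print(early_deletion_days("Cool", 60))     # 0
```

This is why a rule that archives blobs should normally wait long enough that a later delete action does not fire before the 180-day Archive minimum has elapsed.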