Guidance for an SCVMM / Hyper-V deployment in a locked-down, multi-forest environment

This is a “lessons learned” post and a follow-up to an earlier post on “SCVMM in multi-forest environments”. Its aim is to spare others the trial and error of integrating SCVMM with Hyper-V in a secured environment. So what do “secured” and “locked down” mean in this context? Let me first describe the environment and the use case. The options for changing the architecture below were limited by the customer’s internal security regulations.

So you might ask: why not just enable “forest-wide” authentication, and why not place VMM in the same network security zone as the Hyper-V hosts? Well, hmm… simply because I was not allowed to :) So I searched for documentation on this particular scenario: fail.

Multi-forest setups are documented in general, but not this specific trust authentication mode. In addition, the SCVMM documentation on required ports is not 100% complete. Enough said… let’s see how to get this thing up and running.

Environment

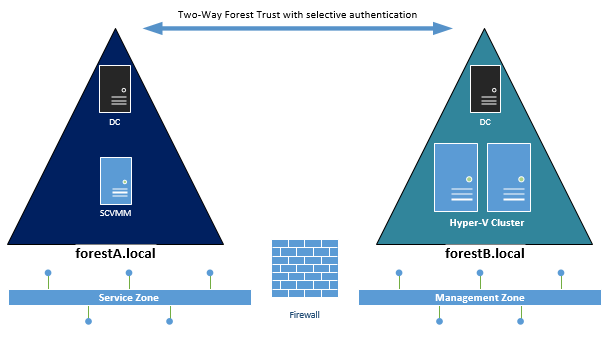


- SCVMM in Forest A (Service Forest)

- Hyper-V Nodes in Forest B (Fabric Forest)

- Two-Way Trust with “selective authentication”

- SCVMM and Hyper-V hosts in different VLANs, separated by a Layer 7 firewall

Selective forest authentication in a nutshell

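Enabling this option in the forest trust properties means that users and groups from the trusted forest are no longer authenticated automatically: each of them must be explicitly granted the “Allowed to Authenticate” permission on every computer object in the trusting forest it needs to access. With a two-way trust, this applies in both directions.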

For details, see the following TechNet documentation: https://technet.microsoft.com/en-us/library/cc755844%28v=ws.10%29.aspx
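
To make the rule concrete, here is a toy model of the “Allowed to Authenticate” check, written in Python as a conceptual sketch only. The class names, the `can_authenticate` function and the account name `FORESTB\svc-vmm-runas` are hypothetical, and the model deliberately ignores nested groups, deny entries and the underlying Kerberos mechanics.

```python
# Toy model of the "Allowed to Authenticate" rule under selective authentication.
# Conceptual sketch only: ignores nested groups, deny entries and Kerberos details.
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Principal:
    name: str                        # hypothetical account name
    forest: str                      # forest that holds the account
    groups: frozenset = frozenset()  # direct group memberships only


@dataclass
class Computer:
    name: str
    forest: str
    # Users/groups explicitly granted "Allowed to Authenticate" on this computer object.
    allowed_to_authenticate: set = field(default_factory=set)


def can_authenticate(user: Principal, target: Computer) -> bool:
    """Return True if `user` may authenticate to `target` across a selective trust."""
    if user.forest == target.forest:
        # Same forest: selective authentication does not restrict this logon.
        return True
    # Cross-forest: an explicit grant on the user or one of its groups is required.
    identities = {user.name} | set(user.groups)
    return not identities.isdisjoint(target.allowed_to_authenticate)


if __name__ == "__main__":
    runas = Principal(r"FORESTB\svc-vmm-runas", "ForestB")
    vmm = Computer("vmm01.forestA.local", "ForestA")
    print(can_authenticate(runas, vmm))  # False: no grant yet
    vmm.allowed_to_authenticate.add(r"FORESTB\svc-vmm-runas")
    print(can_authenticate(runas, vmm))  # True after granting the permission
```

The usage at the bottom mirrors issue 1 below: a RunAs account from Forest B fails against the SCVMM computer object in Forest A until the permission is granted on that object.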

Issues to solve

| # | Issue | Solution |

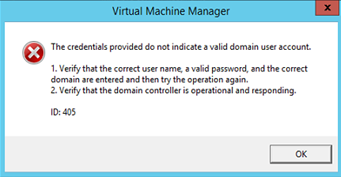

| 1 |

Unable to verify RunAs credentials account for Host Management with error: ID 405 </p>

| Grant the RunAs account from Forest B “allowed to authenticate” permission on the SCVMM computer object in Forest A | </tr>

| 2 |

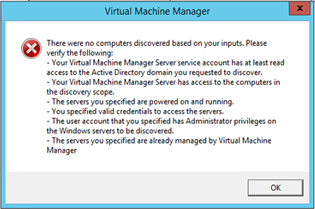

Unable to add Hyper-V Hosts and Clusters with error: </p>

Unable to connect via WMI. Hostname: hv001.forestA.local |

The TechNet documentation on “required ports for SCVMM” does mention the following ports for SCVMM Agent installation: </p>

TCP 445

TCP 135

TCP 139

TCP 80

Assuming that no firewall admin in the world will allow RPC dynamic ports >1024 from SCVMM to all Hyper-V nodes. we have to fix the RPC port range. In this example I used: 51000-51500, and request the firewall rule accordingly

Note! The endpoint mapper (tcp 135) and the RPC port range must also be opened to any CNO (Cluster named Object) resp. to it’s IP address |

| 3 |

Unable to add Hyper-V Hosts and Clusters with error: </p>

|

| </tr>

| 4 |

Cluster Service suddenly stops on certain nodes after some days of operation. Trying to restart the service results in error: </p> RPC Endpoint mapper / no endpoint available</td> | Increase the RPC port range to a minimum of 200 ports. Cluster-intercommunication also requires RPC ports. Depending on other management tasks and interfaces the documented minimum range of 100 ports can become too small. | </tr> </tbody> </table> </div>
